Introduction

Are you trying to compare the prices of products across websites? Are you trying to monitor price changes every hour? Or planning to do some text mining or sentiment analysis on reviews of products or services? If yes, how would you do that? How do you get the details available on the website into a format in which you can analyse it?

- Can you copy/paste the data from their website?

- Can you see some save button?

- Can you download the data?

Hmmm.. If you have these or similar questions on your mind, you have come to the right place. In this post, we will learn about web scraping using R. Below is a video tutorial which covers the initial part of this post.

The slides used in the above video tutorial can be found here.

The What?

What exactly is web scraping or web mining or web harvesting? It is a technique for extracting data from websites. Remember, websites contain a wealth of useful data, but they are designed for human consumption and not for data analysis. The goal of web scraping is to take advantage of the pattern or structure of web pages to extract and store data in a format suitable for data analysis.

The Why?

Now, let us understand why we may have to scrape data from the web.

- Data Format: As we said earlier, there is a wealth of data on websites but it is designed for human consumption. As such, we cannot use it for data analysis as it is not in a suitable format/shape/structure.

- No copy/paste: We cannot copy & paste the data into a local file. Even if we do it, it will not be in the required format for data analysis.

- No save/download: There are no options to save/download the required data from the websites. We cannot right click and save or click on a download button to extract the required data.

- Automation: With web scraping, we can automate the process of data extraction/harvesting.

The How?

- robots.txt: One of the most important and most overlooked steps is to check the robots.txt file to ensure that we have permission to access the web page without violating any terms or conditions. In R, we can do this using the robotstxt package by rOpenSci.

- Fetch: The next step is to fetch the web page using the xml2 package and store it so that we can extract the required data. Remember, you fetch the page once and store it to avoid fetching it multiple times, as repeated requests may lead to your IP address being blocked by the owners of the website.

- Extract/Store/Analyze: Now that we have fetched the web page, we will use rvest to extract the data and store it for further analysis.
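The "fetch once and store" advice above can be sketched as a small helper. This is only an illustration, not the code from the original post: the function name fetch_once and its arguments url and cache_file are made up for this example, and it assumes the xml2 package is installed.

```r
library(xml2)

# Fetch a page only if no local copy exists, then always parse the local copy.
# `url` and `cache_file` are illustrative arguments, not names from the post.
fetch_once <- function(url, cache_file) {
  if (!file.exists(cache_file)) {
    page <- read_html(url)         # the only network request
    write_html(page, cache_file)   # keep a copy on disk for later runs
  }
  read_html(cache_file)
}

# Example call (not run here):
# page <- fetch_once("https://example.com", "page.html")
```

Repeated runs of a script then read the stored file instead of hitting the website again.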

Use Cases

Below are a few use cases of web scraping:

- Contact Scraping: Locate contact information including email addresses, phone numbers etc.

- Monitoring/Comparing Prices: How your competitors price their products, how your prices fit within your industry, and whether there are any fluctuations that you can take advantage of.

- Scraping Reviews/Ratings: Scrape reviews of products/services and use them for text mining/sentiment analysis etc.

Things to keep in mind…

- Static & Well Structured: Web scraping is best suited for static & well structured web pages. In one of our case studies, we demonstrate how badly structured web pages can hamper data extraction.

- Code Changes: The underlying HTML code of a web page can change anytime due to changes in design or for updating details. In such a case, your script will stop working. It is important to identify changes to the web page and modify the web scraping script accordingly.

- API Availability: In many cases, an API (application programming interface) is made available by the service provider or organization. It is always advisable to use the API and avoid web scraping. The httr package has a nice introduction on interacting with APIs.

- IP Blocking: Do not flood websites with requests as you run the risk of getting blocked. Leave some time gap between requests so that your IP address is not blocked from accessing the website.

- robots.txt: We cannot emphasize this enough, always review the robots.txt file to ensure you are not violating any terms and conditions.
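The advice on leaving a time gap between requests could look roughly like the following. It is a sketch in base R: fetch_politely, fetch_fun and delay are hypothetical names chosen for this example, and the two second default is an arbitrary assumption rather than a recommendation from the post.

```r
# Call `fetch_fun` on each URL, pausing `delay` seconds between requests.
# All names here are hypothetical; pass e.g. xml2::read_html as `fetch_fun`.
fetch_politely <- function(urls, fetch_fun, delay = 2) {
  results <- vector("list", length(urls))
  for (i in seq_along(urls)) {
    if (i > 1) Sys.sleep(delay)   # wait before every request except the first
    results[[i]] <- fetch_fun(urls[[i]])
  }
  results
}
```

Passing the fetching function as an argument keeps the pause logic separate from how a page is actually downloaded.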

Case Studies

- IMDB top 50 movies: In this case study we will scrape the IMDB website to extract the title, year of release, certificate, runtime, genre, rating, votes and revenue of the top 50 movies.

- Most visited websites: In this case study, we will look at the 50 most visited websites in the world including the category to which they belong, average time on site, average pages browsed per visit and bounce rate.

- List of RBI governors: In this final case study, we will scrape the list of RBI Governors from Wikipedia, and analyze the background from which they came, i.e. whether there were more economists or bureaucrats.

HTML Basics

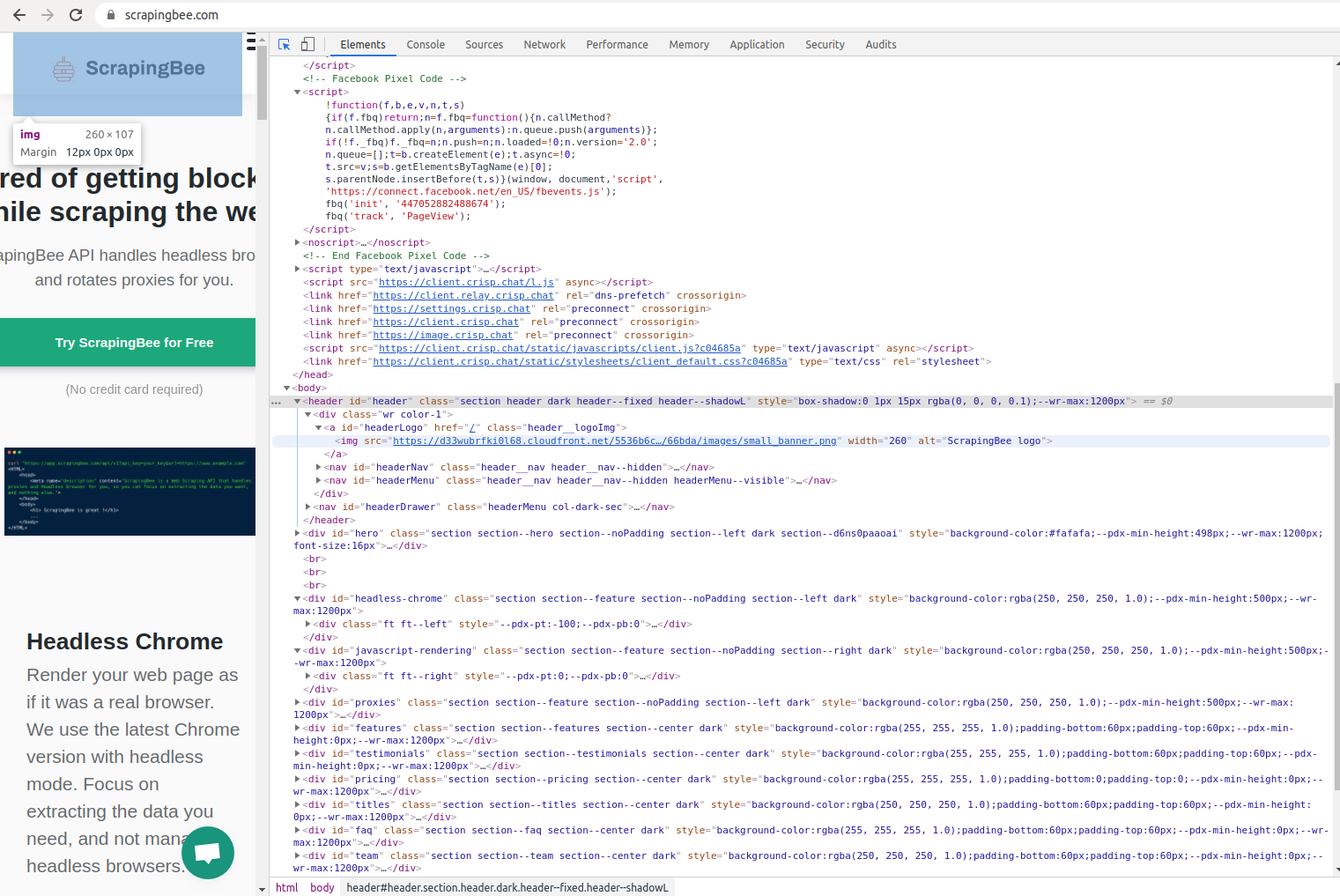

To be able to scrape data from websites, we need to understand how the web pages are structured. In this section, we will learn just enough HTML to be able to start scraping data from websites.

HTML, CSS & JAVASCRIPT

A web page typically is made up of the following:

- HTML (Hyper Text Markup Language) takes care of the content. You need to have a basic knowledge of HTML tags as the content is located within these tags.

- CSS (Cascading Style Sheets) takes care of the appearance of the content. While you don’t need to look into the CSS of a web page, you should be able to identify the id or class that manages the appearance of content.

- JS (Javascript) takes care of the behavior of the web page.

HTML Element

An HTML element consists of a start tag and an end tag with content inserted in between. Elements can be nested and tag names are case insensitive. The tags can have attributes as shown in the above image. The attributes usually come as name/value pairs. In the above image, class is the attribute name while primary is the attribute value. While scraping data from websites in the case studies, we will use a combination of HTML tags and attributes to locate the content we want to extract. Below is a list of basic and important HTML tags you should know before you get started with web scraping.

DOM

DOM (Document Object Model) defines the logical structure of a document and the way it is accessed and manipulated. In the above image, you can see that HTML is structured as a tree and you can trace a path to any node or tag. We will use a similar approach in our case studies. We will try to trace the content we intend to extract using HTML tags and attributes. If the web page is well structured, we should be able to locate the content using a unique combination of tags and attributes.
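To make the idea of tracing a path through the tree concrete, here is a minimal sketch using xml2. The HTML string is invented for illustration and only borrows the class name primary from the text above.

```r
library(xml2)

# A tiny, made-up page used only for illustration
doc <- read_html('<html><body><div class="primary"><p>Hello</p></div></body></html>')

# Locate the paragraph, then ask for its path from the root of the tree
node <- xml_find_first(doc, "//p")
node_path <- xml_path(node)
node_path
```

The returned path lists every ancestor of the paragraph, which is the same kind of trail we follow by eye when inspecting a page in the browser.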

HTML Attributes

- all HTML elements can have attributes

- they provide additional information about an element

- they are always specified in the start tag

- usually come in name/value pairs

The class attribute is used to define equal styles for elements with the same class name. HTML elements with the same class name will have the same format and style. The id attribute specifies a unique id for an HTML element. It can be used on any HTML element and is case sensitive. The style attribute sets the style of an HTML element.
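As a rough illustration of how class and id are used when scraping, the sketch below selects elements from a hand-written HTML fragment with rvest. The fragment, the id intro and the class secondary are assumptions made up for this example.

```r
library(rvest)

# Hand-written fragment: two elements share a class, one of them also has an id
page <- read_html('
  <div id="intro" class="primary">First</div>
  <div class="primary">Second</div>
  <div class="secondary">Third</div>')

# ".name" selects by class, "#name" selects by id
by_class <- page %>% html_nodes(".primary") %>% html_text()
by_id    <- page %>% html_nodes("#intro") %>% html_text()

# Attributes themselves can be read with html_attr()
class_of_intro <- page %>% html_nodes("#intro") %>% html_attr("class")
```

A class can match several elements, while an id is expected to match only one.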

Libraries

We will use the following R packages in this tutorial.
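The list below is reconstructed from the packages that are named elsewhere in this post (robotstxt, xml2, rvest, lubridate, magrittr, tidyr, dplyr) plus stringr and purrr, which are assumed to be the source of str_sub(), str_split(), str_trim() and map_chr(). It may not match the author's exact setup.

```r
# Packages referred to in this tutorial (a reconstruction, not the original list)
pkgs <- c("robotstxt", "xml2", "rvest", "stringr", "purrr",
          "lubridate", "magrittr", "tidyr", "dplyr")

# Report anything that still needs install.packages()
is_installed <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
not_installed <- pkgs[!is_installed]
not_installed
```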

IMDB Top 50

In this case study, we will extract the following details of the top 50 movies from the IMDB website:

- title

- year of release

- certificate

- runtime

- genre

- rating

- votes

- revenue

robotstxt

Let us check if we can scrape the data from the website using paths_allowed() from the robotstxt package.

Since it has returned TRUE, we will go ahead and download the web page using read_html() from the xml2 package.
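The two steps above can be combined into one helper, sketched below. The function name download_if_allowed and the argument page_url are hypothetical, and the exact IMDB URL is not shown in this post, so it is left as a parameter.

```r
library(robotstxt)
library(xml2)

# `page_url` is a placeholder argument; the post does not show the exact URL.
download_if_allowed <- function(page_url) {
  # paths_allowed() checks the site's robots.txt for the given path
  if (!paths_allowed(paths = page_url)) {
    stop("robots.txt disallows scraping this path: ", page_url)
  }
  read_html(page_url)
}
```

Stopping with an error when the check fails makes it harder to scrape a disallowed page by accident.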

Title

We will look at the HTML code of the IMDB web page and locate the title of the movies in the following way:

- hyperlink inside <h3> tag

- section identified with the class .lister-item-content

In other words, the title of the movie is inside a hyperlink (<a>) which is inside a level 3 heading (<h3>) within a section identified by the class .lister-item-content.
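A minimal sketch of that lookup with rvest follows. The HTML is a hand-written fragment that only mimics the structure described above; it is not real IMDB markup, and the movie names are placeholders.

```r
library(rvest)

# Hand-written fragment mimicking the described structure (not real IMDB markup)
page <- read_html('
  <div class="lister-item-content">
    <h3><span>1.</span> <a href="#">Movie One</a></h3>
  </div>
  <div class="lister-item-content">
    <h3><span>2.</span> <a href="#">Movie Two</a></h3>
  </div>')

# section (.lister-item-content) -> level 3 heading (h3) -> hyperlink (a)
movie_title <- page %>%
  html_nodes(".lister-item-content h3 a") %>%
  html_text()
```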

Year of Release

The year in which a movie was released can be located in the following way:

- <span> tag identified by the class .lister-item-year

- nested inside a level 3 heading (<h3>)

- part of section identified by the class .lister-item-content

If you look at the output, the year is enclosed in round brackets and is a character vector. We need to do 2 things now:

- remove the round brackets

- convert year to class Date instead of character

We will use str_sub() to extract the year and convert it to Date using as.Date() with the format %Y. Finally, we use year() from the lubridate package to extract the year from the previous step.
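The cleaning steps above might look like this on a couple of sample values. The input vector is invented for illustration, and the sketch assumes every value has the form "(YYYY)".

```r
library(stringr)
library(lubridate)

year_raw <- c("(1994)", "(1972)")   # illustrative input, assumed to be "(YYYY)"

year_chr   <- str_sub(year_raw, start = 2, end = 5)   # characters 2 to 5 drop the brackets
year_date  <- as.Date(year_chr, format = "%Y")        # month and day default to the current date
movie_year <- year(year_date)                         # numeric year
```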

Certificate

The certificate given to the movie can be located in the following way:

- <span> tag identified by the class .certificate

- nested inside a paragraph (<p>)

- part of section identified by the class .lister-item-content

Runtime

The runtime of the movie can be located in the following way:

- <span> tag identified by the class .runtime

- nested inside a paragraph (<p>)

- part of section identified by the class .lister-item-content

If you look at the output, it includes the text min and is of type character. We need to do 2 things here:

- remove the text min

- convert to type numeric

We will try the following:

- use str_split() to split the result using space as a separator

- extract the first element from the resulting list using map_chr()

- use as.numeric() to convert to a number
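Applied to a couple of made-up runtime strings, the three steps above could be written as follows. The sample values are assumptions for illustration.

```r
library(stringr)
library(purrr)

runtime_raw <- c("142 min", "175 min")   # illustrative input

runtime_parts <- str_split(runtime_raw, " ")    # list of c("142", "min"), ...
runtime_first <- map_chr(runtime_parts, 1)      # first element of each list entry
movie_runtime <- as.numeric(runtime_first)      # character -> number
```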

Genre

The genre of the movie can be located in the following way:

- <span> tag identified by the class .genre

- nested inside a paragraph (<p>)

- part of section identified by the class .lister-item-content

The output includes \n (a newline character) and white space, both of which will be removed using str_trim().
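For instance, with sample strings that imitate the kind of padding described above (the values are invented):

```r
library(stringr)

# Leading newline and trailing spaces, as often found in scraped text
genre_raw <- c("\nDrama            ", "\nCrime, Drama            ")

# str_trim() strips whitespace, including newlines, from both ends
movie_genre <- str_trim(genre_raw)
```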

Rating

The rating of the movie can be located in the following way:

- part of the section identified by the class .ratings-imdb-rating

- nested within the section identified by the class .ratings-bar

- the rating is present within the <strong> tag as well as in the data-value attribute

- instead of using html_text(), we will use html_attr() to extract the value of the attribute data-value

Try using html_text() and see what happens! You may include the <strong> tag or the classes associated with the <span> tag as well.

Since rating is returned as a character vector, we will use as.numeric() to convert it into a number.
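The sketch below shows html_attr() in this role. The fragment is hand-written to follow the description above and is not real IMDB markup; the rating values are placeholders.

```r
library(rvest)

# Hand-written fragment following the described structure (not real IMDB markup)
page <- read_html('
  <div class="ratings-bar">
    <div class="ratings-imdb-rating" data-value="9.3"><strong>9.3</strong></div>
  </div>
  <div class="ratings-bar">
    <div class="ratings-imdb-rating" data-value="9.2"><strong>9.2</strong></div>
  </div>')

# Read the attribute value rather than the visible text, then convert to a number
movie_rating <- page %>%
  html_nodes(".ratings-bar .ratings-imdb-rating") %>%
  html_attr("data-value") %>%
  as.numeric()
```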

XPATH

To extract votes from the web page, we will use a different technique. In this case, we will use xpath and attributes to locate the total number of votes received by the top 50 movies.

xpath is specified using the following:

- tag

- attribute name

- attribute value

Votes

In case of votes, they are the following:

- tag: <meta>

- attribute name: itemprop

- attribute value: ratingCount

Next, we are not looking to extract the text value as we did in the previous examples using html_text(). Here, we need to extract the value assigned to the content attribute within the <meta> tag using html_attr().

Finally, we convert the votes to a number using as.numeric().
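Put together, the xpath lookup for votes could be sketched as below. The fragment is hand-written for illustration, with made-up vote counts; only the tag, attribute name and attribute value come from the text above.

```r
library(rvest)

# Hand-written fragment (not real IMDB markup); vote counts are made up
page <- read_html('
  <div class="ratings-bar">
    <meta itemprop="ratingCount" content="2100000">
  </div>
  <div class="ratings-bar">
    <meta itemprop="ratingCount" content="1500000">
  </div>')

# xpath pattern: //tag[@attribute_name="attribute_value"]
movie_votes <- page %>%
  html_nodes(xpath = '//meta[@itemprop="ratingCount"]') %>%
  html_attr("content") %>%
  as.numeric()
```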

Revenue

We wanted to extract both revenue and votes without using xpath but the way in which they are structured in the HTML code forced us to use xpath to extract votes. If you look at the HTML code, both votes and revenue are located inside the same tag with the same attribute name and value, i.e. there is no distinct way to identify either of them.

In case of revenue, the xpath details are as follows:

- tag: <span>

- attribute name: name

- attribute value: nv

Next, we will use html_text() to extract the revenue.

To extract the revenue as a number, we need to do some string hacking as follows:

- extract values that begin with $

- omit missing values

- convert values to character using as.character()

- append NA where revenue is missing (rank 31 and 47)

- remove $ and M

- convert to number using as.numeric()
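The string hacking above can be sketched on a toy vector. Everything in the input is made up: it stands in for the text of the nv spans, where vote counts and revenue are mixed, and it pretends the third movie has no revenue. For the real data the NA values would go at ranks 31 and 47 instead. The choice of str_extract() and str_remove_all() is an assumption, as the post does not name the functions used.

```r
library(stringr)

# Made-up values standing in for the scraped text: votes and revenue mixed
nv_text <- c("2,100,000", "$28.34M", "1,500,000", "$134.97M", "900,000")

revenue_chr <- str_extract(nv_text, "^\\$.*")       # NA for entries not starting with $
revenue_chr <- as.character(na.omit(revenue_chr))   # keep only the revenue strings

# In this toy data the third movie has no revenue, so pad that position with NA
revenue_chr <- append(revenue_chr, NA, after = 2)

# Strip "$" and "M", then convert to a number (in millions)
movie_revenue <- as.numeric(str_remove_all(revenue_chr, "[$M]"))
```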

Top Websites

Unfortunately, we had to drop this case study as the HTML code changed while we were working on this blog post. Remember the point about code changes in the things to keep in mind, where we warned that the design or underlying HTML code of a website may change. It happened just as we were finalizing this post.

RBI Governors

In this case study, we are going to extract the list of RBI (Reserve Bank of India) Governors. The author of this blog post comes from an Economics background and as such was interested in knowing the professional background of the Governors prior to their taking charge at India’s central bank. We will extract the following details:

- name

- start of term

- end of term

- term (in days)

- background

robotstxt

Let us check if we can scrape the data from the Wikipedia website using paths_allowed() from the robotstxt package.

Since it has returned TRUE, we will go ahead and download the web page using read_html() from the xml2 package.

List of Governors

The data in the Wikipedia page is luckily structured as a table and we can extract it using html_table().

There are 2 tables in the web page and we are interested in the second table. Using extract2() from the magrittr package, we will extract the table containing the details of the Governors.
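A small sketch of this step follows, using a hand-written page with two tables instead of the real Wikipedia page. The column names Officeholder and Term in office are taken from the text; the Background column name, the rows and the first table are placeholders.

```r
library(rvest)
library(magrittr)

# Hand-written stand-in for the Wikipedia page: two tables, the second is the one we want
page <- read_html('
  <table><tr><th>Note</th></tr><tr><td>Unrelated first table</td></tr></table>
  <table>
    <tr><th>Officeholder</th><th>Term in office</th><th>Background</th></tr>
    <tr><td>Governor A</td><td>806 days</td><td>Economist</td></tr>
    <tr><td>Governor B</td><td>2223 days</td><td>IAS officer</td></tr>
  </table>')

# html_table() returns one data frame per table; extract2(2) picks the second
governors <- page %>%
  html_nodes("table") %>%
  html_table() %>%
  extract2(2)
```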

Sort

Let us arrange the data by number of days served. The Term in office column contains this information but it also includes the text days. Let us split this column into two columns, term and days, using separate() from tidyr and then select the columns Officeholder and term and arrange them in descending order using desc().
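On a toy table with placeholder names and terms, the split and sort could look like this. Setting convert = TRUE is an assumption made here so that term becomes a number and sorts numerically rather than alphabetically.

```r
library(dplyr)
library(tidyr)

# Placeholder data with the two columns named in the text
governors <- tibble(
  Officeholder     = c("Governor A", "Governor B", "Governor C"),
  `Term in office` = c("806 days", "2223 days", "45 days")
)

governors_sorted <- governors %>%
  # split "806 days" into term = 806 and days = "days"
  separate(`Term in office`, into = c("term", "days"), convert = TRUE) %>%
  select(Officeholder, term) %>%
  arrange(desc(term))
```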

Backgrounds

What we are interested in is the background of the Governors. Use count() from dplyr to look at the background of the Governors and the respective counts.

Let us club some of the categories into Bureaucrats as they belong to the Indian Administrative/Civil Services. The missing data will be renamed as No Info. The category Career Reserve Bank of India officer is renamed as RBI Officer to make it more concise.
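One way to do the clubbing and counting is sketched below. The data is invented, the labels IAS officer and ICS officer are hypothetical stand-ins for the civil service categories, and case_when() is a choice made for this example since the post does not say which function was used.

```r
library(dplyr)

# Invented data; "IAS officer" and "ICS officer" are hypothetical category labels
governors <- tibble(Background = c(
  "IAS officer", "ICS officer", "Economist", "",
  "Career Reserve Bank of India officer", "IAS officer"
))

background_counts <- governors %>%
  mutate(Background = case_when(
    Background %in% c("IAS officer", "ICS officer")      ~ "Bureaucrats",
    Background == ""                                     ~ "No Info",
    Background == "Career Reserve Bank of India officer" ~ "RBI Officer",
    TRUE                                                 ~ Background
  )) %>%
  count(Background, sort = TRUE)   # largest group first
```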

Hmmm.. So there were more bureaucrats than economists.

Summary

- web scraping is the extraction of data from web sites

- best for static & well structured HTML pages

- review robots.txt file

- HTML code can change any time

- if API is available, please use it

- do not overwhelm websites with requests

To get in depth knowledge of R & data science, you can enroll here for our free online R courses.